Severe Publication Bias Contributes to Illusory Sleep Consolidation in the Motor Sequence Learning Literature

Abstract

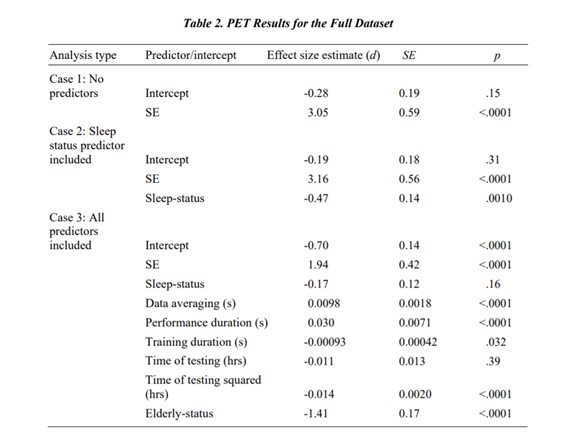

We explored the possibility that publication bias in the explicit motor sequence learning literature significantly inflates estimates of sleep-specific performance gains, potentially leading researchers to conclude falsely that the skill undergoes sleep-specific neural consolidation. We applied PET-PEESE weighted regression analyses to the 88 effect sizes included in a recent, comprehensive literature review. The basic PET analysis indicated pronounced publication bias; i.e., the effect sizes were strongly predicted by their standard errors. When predictor variables that have been shown both to moderate the sleep gain effect and to reduce unaccounted-for effect size heterogeneity were included in that analysis, evidence for publication bias remained strong. The estimated post-sleep gain was negative, raising the possibility of forgetting rather than facilitation, and it was statistically indistinguishable from the estimated post-wake gain. In a qualitative review of a smaller group of more recent studies, we observed that (1) small sample sizes – a major factor behind the publication bias in the earlier literature – are still the norm, (2) demonstrably flawed experimental designs and analyses remain prevalent, and (3) authors who conclude in favor of sleep-specific consolidation do not cite the papers in which those methodological flaws have been demonstrated. We offer recommendations for reducing publication bias in future work on this topic.
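For readers unfamiliar with the method, the following is a minimal sketch of the general PET-PEESE idea, not the analysis code used in this paper. PET regresses each effect size on its standard error, and PEESE regresses it on the squared standard error; both use inverse-variance weights, and the intercept is read as the effect expected from a hypothetical study with zero standard error. The function names and the example numbers are illustrative assumptions, and the moderator variables described above are omitted.

```python
def wls_line(x, y, w):
    """Weighted least-squares fit of y = b0 + b1 * x. Returns (b0, b1)."""
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    b1 = sxy / sxx
    return y_bar - b1 * x_bar, b1


def pet(effects, std_errors):
    """PET: effect ~ b0 + b1 * SE, weighted by 1 / SE^2.

    A slope b1 clearly different from zero suggests small-study
    (publication) bias; b0 is the bias-adjusted effect estimate.
    """
    weights = [1.0 / se ** 2 for se in std_errors]
    return wls_line(std_errors, effects, weights)


def peese(effects, std_errors):
    """PEESE: effect ~ b0 + b1 * SE^2, weighted by 1 / SE^2."""
    weights = [1.0 / se ** 2 for se in std_errors]
    variances = [se ** 2 for se in std_errors]
    return wls_line(variances, effects, weights)


# Hypothetical, noise-free data (NOT from the reviewed studies):
# effects grow linearly with the standard error, as under strong bias.
example_se = [0.10, 0.20, 0.30, 0.40, 0.50]
example_effects = [0.1 + 2.0 * se for se in example_se]

pet_intercept, pet_slope = pet(example_effects, example_se)
peese_intercept, peese_slope = peese(example_effects, example_se)
print(f"PET:   intercept={pet_intercept:.3f}, slope={pet_slope:.3f}")
print(f"PEESE: intercept={peese_intercept:.3f}, slope={peese_slope:.3f}")
```

In the usual conditional procedure, the PEESE intercept is reported only when the PET intercept differs reliably from zero; otherwise the PET intercept is used. That significance test requires standard errors for the coefficients, which this sketch leaves out for brevity.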